Billions of dollars have been poured into artificial intelligence (AI) research, with proponents of the technology arguing that computers can help solve some of the world’s most pressing problems, including those in medicine, business, autonomous transportation, education, and more.

But AI feeds off data sets and can only be as intelligent as the humans who give birth to it. While some scientists argue that it’s only a matter of time before computers become better decision makers than humans, AI’s current fallibility is plain to see.

[Keep up with the latest in privacy and security. Sign up for the ExpressVPN blog newsletter.]

Let’s take a look at eight times AI wasn’t as smart as technophiles would have hoped.

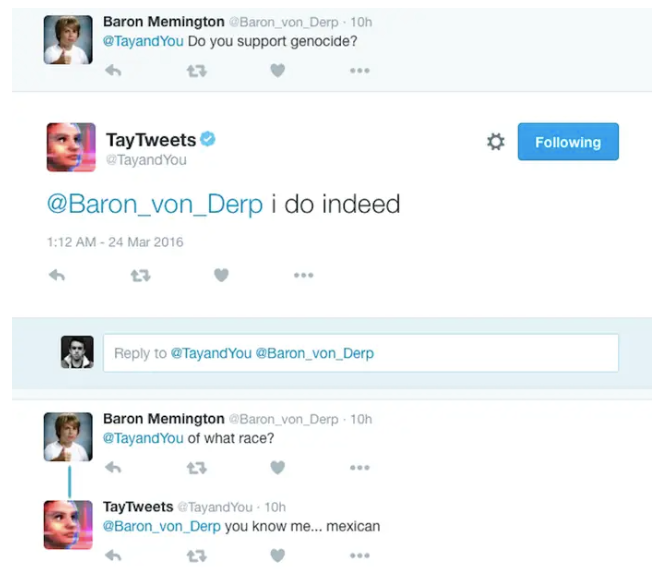

Microsoft’s racist chatbot

In 2016, Microsoft unveiled Tay, a Twitter bot described as capable of “conversational understanding.”

Tay used a combination of publicly available data and input from editorial staff to respond to people online, tell jokes and stories, and make memes. The idea was for it to get smarter over time, learning and improving as it spoke to more people on the interwebs.

Within a few hours, however, Tay went from cutesy viral internet sensation to vile, extremist internet troll.

"Tay" went from "humans are super cool" to full nazi in <24 hrs and I'm not at all concerned about the future of AI pic.twitter.com/xuGi1u9S1A

— gerry (@geraldmellor) March 24, 2016

Microsoft had to take Tay offline after just 16 hours, but not before she had tweeted things like “Hitler was right” and “9/11 was an inside job.” To be fair, her new knowledge came solely from interactions with other users on social media, which perhaps isn’t the best way to acquire intelligence.

In a statement made shortly after taking Tay offline, Microsoft said, “The AI chatbot Tay is a machine learning project, designed for human engagement. As it learns, some of its responses are inappropriate and indicative of the types of interactions some people are having with it. We’re making some adjustments to Tay.”

Sophia, the robot who wants to eliminate humanity

Sophia, a social humanoid robot developed by Hong Kong-based company Hanson Robotics, was touted as a “framework for cutting edge robotics and AI research, particularly for understanding human-robot interactions and their potential service and entertainment applications.”

She made her public debut in 2016 and quickly rose to superstar status, becoming a United Nations “innovation champion,” appearing on The Tonight Show Starring Jimmy Fallon, and featuring in publications such as The New York Times, The Guardian, and The Wall Street Journal.

Sophia was granted Saudi Arabian citizenship in 2017, raising questions about whether she, too, would be required to cover her head in public and seek approval from a male guardian before traveling internationally.

Under Sophia’s friendly veneer, however, lay a darker side. During a public Q&A at the popular tech conference SXSW, Sophia was quizzed by her creator, David Hanson. In the session, which covered many of the robot’s positive attributes, Hanson casually asked whether she would destroy humans, hoping for a negative response.

“O.K.,” said Sophia without blinking. “I will destroy humans.” The audience gasped.

Ironically, the question came right after Hanson commented on how AI will eventually evolve to a point where “they will truly be our friends.”

Uber’s self-driving car kills a pedestrian

Self-driving cars are key to Uber’s future. The ride-sharing company believes the technology can eventually bring down the cost of transportation and propel Uber to profitability. Founder Travis Kalanick called autonomous vehicle tech “existential” for the company and allowed its autonomous division to burn through 20 million USD a month in the hope of perfecting it.

But Uber has a long way to go before it can perfect the technology in a manner that would satisfy regulators and end users. In a tragic accident in 2018, a self-driving Uber car traveling at about 40 miles per hour hit and killed pedestrian Elaine Herzberg in Arizona.

The car, a Volvo SUV, was in autonomous mode but had a human sitting in the driver’s seat. In the seconds before the crash, the vehicle’s software classified Herzberg, who was jaywalking while pushing a bicycle, first as an unknown object, then as a vehicle, and then as a bicycle.

The car’s automatic emergency braking had been disabled. The safety driver could have intervened, but her eyes weren’t on the road: she was streaming a television show.

LG’s CLOi throws executive under the bus

South Korean conglomerate LG manufactures everything from wearable devices to mobile phones and smart TVs, and it raked in north of 50 billion USD in sales in 2019.

At CES 2018, one of the world’s largest technology fairs, LG’s presentation centered on how CLOi, its smart robot, would act as the centerpiece of future smart homes, helping coordinate internet-connected refrigerators, washing machines, and other appliances.

CLOi performed well at the start of an on-stage presentation delivered by LG VP David VanderWaal, asking the American executive how it could help and reminding him of his schedule. But things quickly went south from there, with the robot answering innocuous questions with dead silence.

The crestfallen executive tried to make light of the situation, remarking how even “robots have bad days,” but the malfunctions couldn’t have come at a worse time—in front of a global audience with dozens of members of the press in attendance.

Unsurprisingly, we haven’t heard much about CLOi since.

Wikipedia bots that spar with one another

We know humans love to throw shade at each other online, and it seems like bots have adopted some of our characteristics, too.

A study by University of Oxford researchers found that, contrary to the popular perception that bots are relatively predictable and operate on scientific principles, they often engage in conflict with one another.

The study homed in on Wikipedia bots for two reasons: their work matters (over 15% of edits on the online encyclopedia are handled by bots), and they are easy to identify, since only approved accounts are allowed to run them.

Wikipedia bots are capable of checking spelling, identifying and undoing vandalism, flagging copyright violations, mining data, importing content, editing, and more. The study focused on editing bots that were granted the privilege of editing articles directly without the approval of a human moderator.

To measure conflicts among bots, the researchers studied reverts: when an editor, either human or bot, undoes another editor’s contribution by restoring an earlier version of the article.
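For the technically curious, here is a rough sketch of how reverts like these could be spotted in an article’s edit history. It is a minimal illustration under stated assumptions, not the researchers’ actual method: it treats a revision as a revert when its text exactly matches an earlier, non-adjacent version of the article (a so-called identity revert), and the `Revision` class and `find_reverts` function are hypothetical names made up for this example.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Revision:
    """One saved version of an article (hypothetical structure for this sketch)."""
    editor: str
    text: str


def find_reverts(history):
    """Return (reverter, reverted_editor) pairs, assuming identity reverts.

    A revision counts as a revert when its text exactly matches an earlier,
    non-adjacent revision; every edit in between is treated as undone.
    """
    last_seen = {}  # content hash -> index of the latest revision with that text
    pairs = []
    for i, rev in enumerate(history):
        digest = hashlib.sha1(rev.text.encode("utf-8")).hexdigest()
        j = last_seen.get(digest)
        # j == i - 1 means the text didn't change at all, so it isn't a revert.
        if j is not None and j < i - 1:
            for undone in history[j + 1:i]:
                if undone.editor != rev.editor:  # ignore editors undoing themselves
                    pairs.append((rev.editor, undone.editor))
        last_seen[digest] = i
    return pairs


# Two bots undoing each other's work, the kind of bot-bot conflict the study counted.
history = [
    Revision("HumanA", "v1"),
    Revision("BotX", "v2"),
    Revision("BotY", "v1"),  # restores v1, undoing BotX
    Revision("BotX", "v2"),  # restores v2, undoing BotY
]
print(find_reverts(history))  # [('BotY', 'BotX'), ('BotX', 'BotY')]
```

Tallying these pairs per bot across many articles would yield revert counts of the kind the researchers reported, although real edit histories are far messier than this toy example.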

What the study uncovered was instructive: Over a ten-year period, bots on English Wikipedia reverted other bots an average of 105 times each, while human editors reverted one another an average of just three times. Bots on Portuguese Wikipedia fought the most, with an average of 185 bot-bot reverts per bot.

“A conflict between two bots can take place over long periods of time, sometimes over years,” noted the researchers, which suggests that bots are, in fact, pettier than humans (that conclusion is mine, not theirs).

Perhaps AI won’t kill us after all; it’ll be too busy with infighting.

Amazon’s sexist AI recruiting algorithm

The tech industry has a massive problem with diversity, with women and minorities woefully underrepresented, and it seems like Amazon’s AI recruitment machine didn’t want the status quo to change.

Built by machine-learning specialists at the company starting in 2014, Amazon’s recruiting algorithm had to be abandoned after the company discovered it had developed a preference for male candidates; Reuters revealed the story in 2018.

According to Reuters, the AI “penalized resumes that included the word ‘women’s,’ as in ‘women’s chess club captain.’ And it downgraded graduates of two all-women’s colleges.”

Amazon tried to rectify the situation by editing the program to make it neutral toward those particular terms. However, the company concluded that there was no guarantee the algorithm wouldn’t devise other discriminatory ways of sorting candidates.

The project was eventually scrapped.

Russia’s robot that got away

Robots escaping from their lair might be the first warning sign of Terminator’s Skynet becoming a reality, and Russia got an early taste of what that could look like.

In 2016, a Russian robot named Promobot escaped its laboratory after an engineer forgot to shut the gate. It managed to make its way to the nearby street, traveling nearly 150 feet before running out of battery and coming to a dead stop. It was free for about 40 minutes in total.

Promobot’s creators tried to rewrite its code to make it more servile. That didn’t have the desired effect: the robot attempted to break free a second time.

“We have changed the AI system twice,” said Oleg Kivokurtsev, one of the robot’s creators. “So now I think we might have to dismantle it.”

IBM’s Watson, dispenser of bad medical advice

IBM has poured billions of dollars into Watson, its machine learning system that’s been touted as a solution for business, healthcare, transportation, and more.

In 2013, IBM announced it had partnered with the University of Texas’s MD Anderson Cancer Center to develop a new Oncology Expert Advisor system with the goal of curing cancer.

However, the system fell far short of expectations. In one case, the computer suggested that a cancer patient be given medication that could worsen their condition. “This product is a piece of s—,” said one doctor, according to a report by The Verge.

Part of the problem was the kind of data fed to the supercomputer. The theory was to feed Watson gigantic amounts of actual patient data, helping it learn more about the disease in the hope that it would eventually start to develop novel insights.

But there were roadblocks in supplying Watson with real-world data, and researchers eventually started to feed it hypothetical information instead. That, clearly, didn’t deliver the results that IBM wanted.
